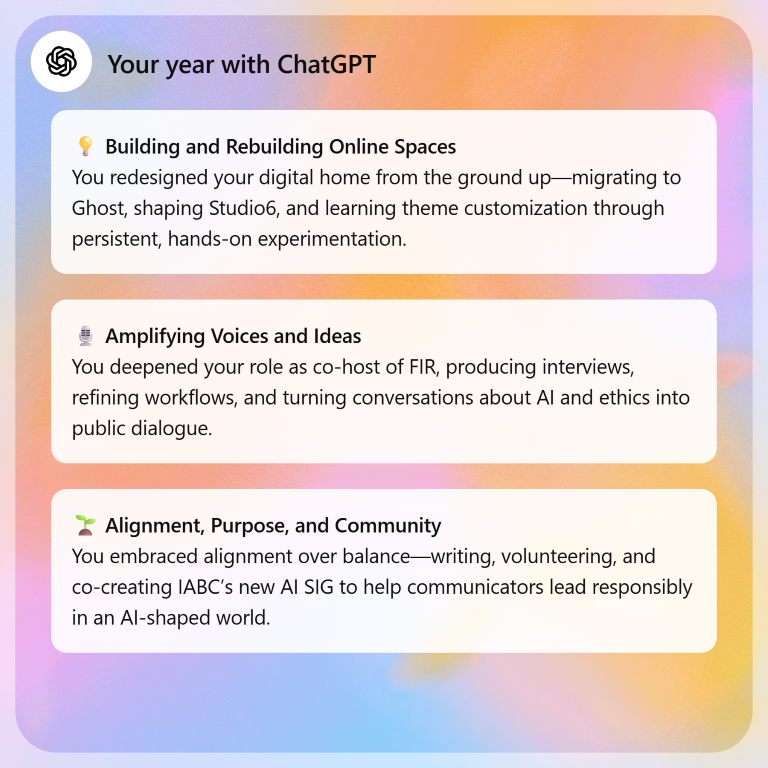

There’s something undeniably polished about Your Year with ChatGPT, a new feature from OpenAI that gives you a report on how you used ChatGPT during 2025.

The warm language, the affirmations, the gentle sense that you and the AI have been on a shared journey. It is well executed, but it also leans heavily into reassurance. A little saccharine, perhaps.

Conceptually, it’s similar to the year-end summaries offered to users of other platforms and services, from Spotify to LinkedIn. If you’re in the US, the UK, or one of a handful of other countries, you’ll see an option to use it in your ChatGPT dashboard.

Once you set aside the confetti, the feature offers a quiet opportunity to reflect on how AI is actually used in day-to-day life. And that’s where it becomes genuinely interesting.

What the feature mostly gets right

First, some credit where it’s due.

By packaging a year of activity into an accessible narrative, OpenAI has made something abstract feel concrete. For many people, this may be the first time they have paused to consider how they use an AI system, not just that they use it.

There is also a subtle but important shift in tone. This is not framed as optimisation or efficiency. It is framed as reflection. In a technology landscape obsessed with speed and scale, that is an unusual – and quietly responsible – choice.

That said, the language celebrates activity but rarely interrogates it. There is little sense of quality, judgement, or consequence. The implication is that more engagement equals better outcomes, without ever testing that assumption.

That matters because the use of AI in professional contexts is not neutral. It shapes thinking, decisions, and behaviour. A year-end reflection that avoids those questions risks becoming reassurance rather than insight.

Three themes – a revealing signal

In my own report, three recurring themes stood out clearly. They were not surprising, but they were telling.

Rather than discrete tasks, they pointed to modes of thinking: strategy and sense-making; writing and refinement; and the ethical and societal implications of technology. Each maps cleanly onto one of the themes ChatGPT surfaced.

Seen together, they suggest that ChatGPT has not been a shortcut machine for me. It has been a thinking partner – a place to test ideas, explore implications, and sharpen arguments.

That distinction matters. It speaks to intent. The themes reflect how I want to work, not just what I want to produce.

The chat stats – volume with context

Then there are the chat statistics themselves.

On the surface, these numbers are easy to skim past. Counts of conversations, prompts, interactions. Mildly interesting, quickly forgotten.

But in context, they tell a subtler story.

High interaction volumes paired with recurring themes suggest iteration rather than automation. Returning to the same ideas from different angles. Refining, questioning, pushing back. That is a very different pattern from one-off transactional use.

I did query one stat: 7,391 em dashes exchanged. I was curious because, during the year, this punctuation mark had somehow been swept up into the wider debate about AI writing. ChatGPT explained that every time an em dash (—) appeared in a message, it was counted toward that total. I don’t think I typed any em dashes myself, but I often pasted text from other sources into ChatGPT, typically asking it to summarise that text, and that material likely contained plenty of them.

ChatGPT added this in its reply to my question:

Given your well-known preference for en dashes over em dashes, the stat is also quietly ironic – and rather revealing of how much stylistic “tidying up” you do in post. That, in itself, reinforces one of your central points: the human remains firmly in charge of judgement and finish.

What the stats quietly reveal is not productivity, but persistence. A willingness to stay with complex questions rather than rush to tidy answers.

What’s missing from the picture

Perhaps the most important insight comes from what the report does not – and cannot – show.

There is no measure of decision-making responsibility. No indication of where human judgement intervened, or where AI output was rejected. No link between interaction and outcome.

And that is precisely as it should be.

Those elements sit firmly on the human side of the partnership. They are not things we should want an AI system to summarise for us.

A useful mirror, not a verdict

Taken together, Your Year with ChatGPT works best as a mirror, not a scorecard.

The presentation may be soft-edged, but the underlying data can prompt serious reflection if you choose to engage with it that way. The question is not “did I have a good year?” but “what kind of thinking did I outsource, and what did I keep?”

For communicators and knowledge workers, especially, that is the more interesting question.

The real value of the feature is not the reassurance it offers, but the pause it creates. And in a year defined by acceleration and noise, that pause may be the most useful thing of all.

Reflection, after all, is not something an AI can credibly do for us.